The annual Google I/O developer conference was held as scheduled on May 10, local time in the United States. Google has long used I/O to showcase its most cutting-edge AI technology. But this year, the AI giant's posture is a little different from the past.

Google kept its usual cadence of releases at this conference, launching the PaLM 2 large model, an upgraded Bard, and the Gemini multimodal model. To outsiders, however, these technologies seem to carry less novelty and creativity and more of the anxiety of catching up. After all, in the wave of large AI models set off by Microsoft and OpenAI, anyone who is not the first mover looks as if they are chasing Microsoft from behind.

In fact, Google has never been slow on the AI track. AI has been a fixture of Google I/O for the past two years, and this year the AI flavor was stronger still: beyond Bard and Workspace, product lines where Microsoft has already staked out positions, Google is also bringing generative AI into Android.

Bing has always been seen as Microsoft's entry point for large models, an almost perfect combination. For Google, however, the killer app may not be search, with its much larger user base, but Android. Its ecosystem seems to give Google a solid moat. But who will win this AI competition as the technology keeps iterating will take time to tell.

Search is not the only trump card for large models, and how long can an ecosystem advantage keep a company ahead?

How important is first-mover advantage to Google search?

The moment Microsoft gave Bing GPT capabilities, it effectively declared war on Google. And the moment Google launched Bard, it took up the challenge.

At this conference, Google released the PaLM 2 large model, which the industry widely regards as a product benchmarked against GPT-4.

In fact, the PaLM model shown at the 2022 I/O conference already had 540 billion parameters. Judged by parameter count alone, that is about three times as many as GPT-3 (175 billion), OpenAI's main product at the time. This year's advanced version, PaLM 2, has also improved significantly in mathematics, code writing, logical reasoning, and multilingual ability.

With the upgrade of the underlying large model, Bard, the large language model (LLM) conversational bot used in Google's search engine, has also been upgraded, gaining a new citation feature to make its content more accurate. There is also an export feature that sends results to Gmail or Docs in one click.

In the future, Bard will also integrate with other Google applications (Maps, Photos, Messages, Flights, YouTube, etc.) and open up third-party extensions, such as using Adobe Firefly for text-to-image generation. Combined with Google's newly announced Gemini multimodal model, it will offer multimodal capabilities similar to GPT-4's image recognition. Currently, developers can access PaLM 2 through Google's PaLM API, Firebase, and Colab.

Despite the progress, Bard still seems a small step behind the competition: almost all of these Bard updates had been announced for Microsoft's New Bing not long before. But how much can first-mover advantage decide in the battle of the large models?

Consider Microsoft's recent numbers. Data from market research firm Sensor Tower shows that Bing's traffic rose 16% in the six weeks after Microsoft announced it would bring ChatGPT into search. In Microsoft's latest earnings report, for Q1 2023, search and news advertising revenue grew 10% year-over-year, both beating analysts' expectations.

Although Bing's traffic rose sharply, Google's earnings report for the same period did not show a significant decline in its search business. Google already holds an absolutely dominant position in the search engine market, and as the saying goes, "conquering a territory is easy; holding it is hard." Google search may not need to rely on AI capabilities to fight for position the way Bing does. For the search market leader, what matters is staying conservative and stable. Avoiding, as far as possible, mishaps like the one at Bard's debut may be the key to holding its market position at this stage.

Moreover, the AI battle in search will be a long one, and it does not hinge on who ships a product first at this moment. What matters more is the underlying capability of the large models: the contest is over whether PaLM or GPT evolves faster.

From a developer's point of view, Google's large model is friendlier to small and medium-sized developers as well as enterprise users. PaLM 2 comes in four models of different parameter sizes, Gecko, Otter, Bison and Unicorn, and can be fine-tuned on domain-specific data to perform particular tasks for enterprise customers.

One of them, the Gecko model, can even run directly on mobile phones. Not only does this reduce development costs, but it also expands the possibilities for application development. AI applications that do not depend on cloud services not only significantly reduce the cost of using an LLM, but also better protect the security of local data.

Of course, for enterprise users, PaLM 2 is also easier to put into practice, with specialized models developed for different scenarios. Currently, more than 70 product teams within Google are already building products using PaLM 2. Two of these industry models were demonstrated at I/O: Sec-PaLM for security, which focuses on analyzing and interpreting potentially malicious scripts; and Med-PaLM 2 for healthcare, which can pass the US medical licensing exam and even synthesize patient information from medical images. Soon, both models will be available to select customers through Google Cloud.

Taken together, from search to enterprise applications, it is still hard to say whether Google or Microsoft will come out ahead in the next stage of the AI race.

Can the Android ecosystem become a barrier for Google's AI?

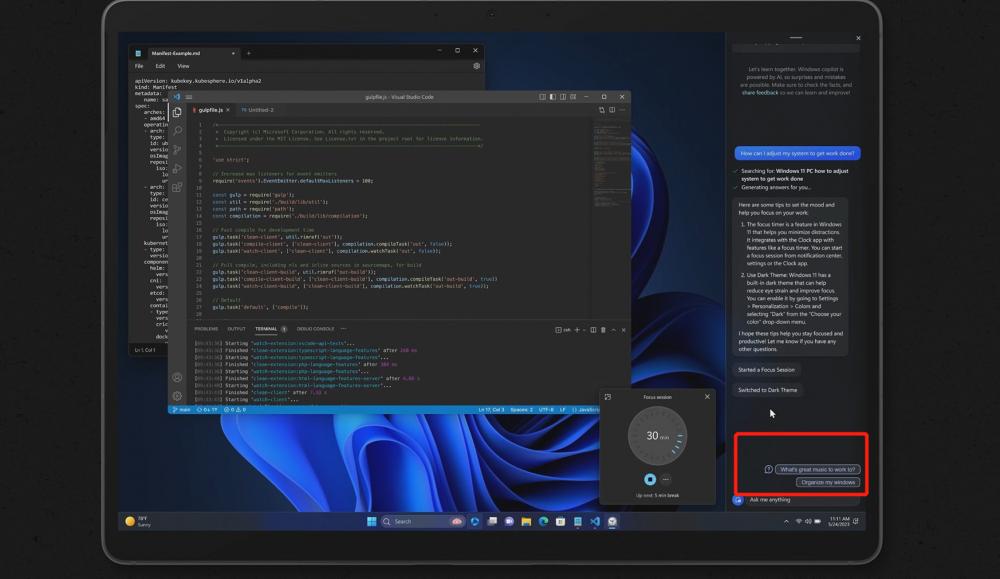

One of the highlights of this conference is Google's integration of AI features into Android.

The newly announced Android 14 also incorporates two AI features: Magic Compose, which helps users rewrite sentences with generative AI, and the ability to create personalized wallpapers with 3D effects using generative AI.

Android messaging features

In addition to application features, Google also launched Studio Bot, an AI coding assistant built specifically for Android, which not only generates code and fixes bugs but can also answer questions about Android application development. It supports the Kotlin and Java programming languages and will be embedded directly in the toolbar of the Android Studio development environment.

This is perhaps Google's most important AI move.

At present, both New Bing and Bard are opening plug-in systems around the search engine, and both have mentioned integrating with OpenTable for restaurant reservations and Instacart for ordering and delivery, to provide users with more extensible services.

However, no matter how many plug-ins are opened up, they can only be used within the search engine, which imposes some limitations.

For users and service providers, the plug-in model offers limited functionality and insufficient cross-platform integration, which may end up increasing operational complexity for users or reducing service providers' ability to serve across platforms. For example, you can complete all the bookings for a trip within Ctrip, but if flight, hotel and restaurant booking are split into three plug-ins, the process is bound to be tedious.

In addition, the plug-in developer ecosystem may run into problems of its own. On the one hand, the search engine, as the base platform, has to be open enough to meet developers' needs; on the other hand, application scenarios have to be defined and developers trained around the search-engine-based AI platform. This inadvertently raises the development threshold for the AI ecosystem.

For Google, the plug-in ecosystem is likewise only just getting started. But Google, after all, has a huge developer community in the Android ecosystem, and if AI capabilities are added to it, Google will be able to build a thriving AI ecosystem faster. An Android-based AI application ecosystem may let Google build a generative AI moat one step ahead of Microsoft.

However, some people think that large AI models may not form an OS-level ecosystem. AI industry analyst Cang Jian told Tiger, "Large AI models may be more like super apps such as WeChat and Alipay, which are application-type platforms."

It is true that Google has a stronger ecosystem at the operating system level and has gathered a large number of quality developers. But the rise of lightweight applications such as WeChat mini programs also shows that plug-in applications with a "super app" as their entry point have their own advantages.

First, plug-ins cost far less to develop. Launching a mini program in WeChat certainly costs much less than launching an app in an app store, and some mini programs are now even cheaper to develop than some H5 (web) pages. Second, mini programs are more convenient for delivering a single function. For an LLM, if no complicated operation is needed and natural-language input is enough to get the result, there may indeed be no need to develop a complicated app.

And compared with apps, the traffic dividend of the search engine as an entry point may, to some extent, compensate for the plug-ins' limited functionality. From this point of view, although the Android ecosystem may be Google's first step in attracting AI developers, the battle over search-engine plug-ins will also keep brewing between the two giants, and among future entrants as well.
