At a time when AI regulation is an increasingly high-profile topic around the world, OpenAI CEO Sam Altman said in a new interview that an AI agency similar to the International Atomic Energy Agency could be created, allowing everyone to participate in a global regulator of extremely powerful AI training systems.

The interview took place on May 9 as part of the Sohn 2023 conference, a major annual conference for the hedge fund community. A few days later, the organizers, the Sohn Conference Foundation, released the full video of the interview. In the interview, Altman also talks about how he uses ChatGPT and about the respective characteristics and development paths of open source and closed source AI. He believes that reinforcement learning from human feedback (RLHF) is not the right long-term solution.

The following is part of the interview:

Patrick Collison (co-founder and CEO of payment platform Stripe): What is the ChatGPT feature you use most often, when you are not testing something and genuinely want to use it as your tool?

Altman: Without a doubt, summarization. I don't know how I would keep working without it. I probably couldn't handle email and Slack (instant messaging software). You know, you can send a bunch of emails or Slack messages to it.

Hopefully, over time, we'll build some better plugins for this scenario. But even manually it works pretty well.

Collison: Are there any plugins that have become part of your workflow?

Altman: I use the code interpreter occasionally. But to be honest, for me personally, plugins haven't really become part of my daily habits.

Collison: Some people think that with the training data we have now, we're close to exhausting what the Internet offers, and that you can't increase that amount by two orders of magnitude. I think you might counter that by saying, yes, but there is also synthetic data generation (data generated artificially by computer programs). Do you think the data bottleneck is important?

Altman: I think, as you mentioned earlier, as long as you can reach that synthetic data critical mass and the model is smart enough to produce good synthetic data, I think it should be fine.

We definitely need new techniques, and I don't want to pretend that we don't have that problem. The naive approach of simply scaling up a Transformer pre-trained on data from the Internet will run out, but that's not our plan.

Collison: So one of the big breakthroughs in GPT-3.5 and 4 is RLHF (reinforcement learning from human feedback). If you, Sam, did all the RLHF yourself, would the model become significantly smarter? Does the person giving the feedback matter?

Altman: I think we're getting to a point where, in some areas, you really need smart experts giving feedback to make the models as smart as they can be.

Collison: As we consider all the AI safety issues that have now come into focus, what are the most important lessons we should learn from the experience of nuclear nonproliferation?

Altman: First, I think it's always a mistake to borrow too much from previous technologies. Everybody wants to find an analogy, everybody wants to say, oh, this is like this or that, we did this before, so we'll do it again, but each technology takes a different form. However, I think there are some similarities between nuclear materials and AI supercomputers, and that's something we can learn from and gain insight from, but I would caution against overlearning the lessons of the last thing. I think it would be possible to create an AI agency similar to the International Atomic Energy Agency (IAEA) - I know how naive that sounds and how difficult it would be to do so - but getting everyone to participate in a global regulator of extremely powerful AI training systems seems to me to be a very important thing. I think that's one of the lessons we can learn.

Collison: If it gets set up and exists tomorrow, what should it do first?

Altman: Any system that exceeds a threshold we set - the easiest way to do this is a compute threshold (the best way is a capability threshold, but that's much harder to measure) - any system that exceeds that threshold, I think, should be audited. The organization should be given full visibility, and the system should be required to pass certain safety evaluations before it is released. That would be the first thing.

Collison: One of the big surprises for me this year has been the progress of the open source model, especially the crazy progress in the last 60 days or so. How good do you think the open source model will be in a year?

Altman: I think there will be two tracks here: the best closed-source models at massive scale, and the progress of the open-source community, which may be a few years behind. But I think we're going to live in a world where there are very powerful open-source models, people are going to do all kinds of things with them, and the creativity of the whole community is going to amaze us all.

Collison: Maybe mega models are scientifically very interesting, and maybe you need them to do things like push AI forward, but for most practical everyday cases, maybe an open source model will be enough. How likely do you think this future is?

Altman: I think for a lot of things that have great economic value, these smaller open source models will be sufficient. But you just mentioned one of the things I wanted to talk about, which is helping us invent superintelligence. That's a very economically valuable activity, like curing all cancers or discovering new laws of physics, and those things will be achieved by the largest models first.

Collison: Should Facebook open source LLaMA now?

Altman: At this point, they probably should.

Collison: Should they open source their base model/language model, or just LLaMA?

Altman: I think Facebook's AI strategy has been chaotic at best, but I think they're starting to get very serious now and they have very capable people, and I expect they'll have a more coherent strategy soon. I think they're going to be a surprisingly serious new player.

Collison: Are there any new findings that might significantly change your estimate of the likelihood of a catastrophic outcome, either by raising or lowering it?

Altman: Yes, I think there's a lot, and most of the new work between now and superintelligence will make that probability go up or down.

Collison: So, what are you particularly concerned about? Is there anything in particular that you'd like to know about?

Altman: First of all, I don't think reinforcement learning from human feedback (RLHF) is the right long-term solution. I don't think we can rely on it. I think it's helpful, and it certainly makes these models easier to use. But what you really want is to understand the inner workings of the model and to be able to align it, for example, to know exactly which circuit or set of artificial neurons is doing what, and to be able to tune it in a way that gives a stable change in the performance of the model.

There's a lot more beyond that, but if we could work reliably in that direction, I think the probability of disaster for everyone would be dramatically reduced.

Collison: Do you think there's enough interpretability work being done?

Altman: No.

Collison: Why not?

Altman: Most of the people who claim they're really worried about AI safety seem to just spend time on Twitter saying they're really worried about AI safety, or doing a lot of other things.

There are people who are very worried about AI safety and are doing good technical work. We need more people like that, and we're definitely putting more effort inside OpenAI into getting more technical people working on this problem.

But what the world needs is not more AI safety people tweeting and writing long-winded rants, but more people who are willing to do the technical work to make these systems safely and reliably aligned. I think this is happening through a combination of good machine learning researchers shifting their focus and new people coming into the field.

Collison: It seems like soon we will have agents that you can talk to in a very natural way, with low-latency full duplex (which allows simultaneous two-way data transfer between two devices). Obviously, we've seen products like Character and Replika, and even these early products have made pretty amazing progress in this direction.

I think these could be a huge deal, and perhaps we are greatly underestimating them, especially once you can have a conversation via voice. Do you think that's right? And if so, what do you think the possible consequences are?

Altman: Yes, I do think that's right.

Someone recently made a striking comment to me: they were fairly certain that their children would have more AI friends than human friends in the future. As for the consequences, I don't know what that will look like.

One thing that I think is important is that we establish a social norm as soon as possible: you should know whether you're interacting with an AI, a human, or some weird AI-assisted human. People seem to have a hard time distinguishing this in their minds, even with early, crude systems like the Replika you mentioned.

Whatever the circuitry in our brains that craves social interaction, it seems that some people can satisfy that need in some cases by spending time with AI friends.

Collison: One of the discussion threads I read recently on Replika's subreddit was about how to deal with the emotional challenges and trauma of upgrades to the Replika model, because your friend suddenly becomes a little less capable, or at least a slightly different person. All of these users know that Replika is actually an AI, but somehow that doesn't seem to make much difference to our emotional responses.

Altman: Most people feel that we're moving toward a society where there's one supreme superintelligent being floating in the sky. I think a society that's less scary in a sense, but still a little bit weird, is one where a lot of AIs and humans are integrated together.

There have been a lot of movies about this, like the character C-3PO in Star Wars or whatever AI you want to pick. People know it's an AI, but it's still useful, people still interact with it, and it's kind of like a cute person, even though you know it's not a person.

And in a world where we have a society where a lot of AI is integrated into the infrastructure along with humans, it feels easier for me to deal with and less scary.

Collison: For OpenAI, obviously you want to be and are a preeminent research organization. But with regard to commercialization, is it more important to be a consumer company or to be an infrastructure company?

Altman: As a business strategy, I'm all for platforms plus top applications. I think this has been successful in many companies for good reason. I think the consumer products we develop help improve our platform. I hope that over time we can find ways for the platform to improve consumer applications as well. I think it's a good coherent strategy to do that at the same time.

But as you point out, our goal is to be the best research organization in the world, and that's more important to us than any productization. We've built an organization like this to be able to keep making breakthroughs, and while not all of our attempts will be successful, and we've taken some detours, we've found more paradigm shifts than others, and I think we're going to make the next big breakthrough here. That's the focus of our efforts.

Collison: What's the best non-OpenAI AI product you've used?

Altman: Frankly, I can't think of any other products. I have a rather narrow view of the world, but ChatGPT is the only AI product I use every day.

Collison: Is there an AI product that you wish existed, that you think our current capabilities or upcoming capabilities make possible, and that you look forward to?

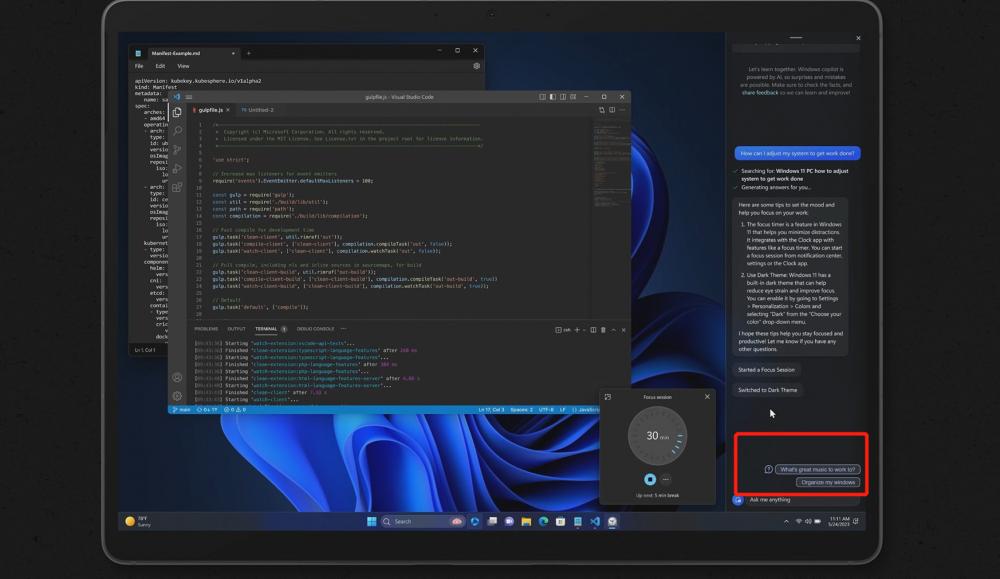

Altman: I'd like to have something like a "co-pilot" that can control my entire computer. It could look at my Slack, email, Zoom, iMessage, and huge to-do lists and documents, and do a lot of my work.

Collison: OpenAI has done a great job of raising money and has a very unusual capital structure, with the nonprofit, the Microsoft deal, and so on. Are such peculiar capital structures underrated? Should companies and founders be more open to considering them?

Altman: I suspect not. I suspect that innovation in this area can be a terrible thing, and you should be innovating in terms of products and science, not in terms of corporate structure.

The problems we faced took a very peculiar form, and despite our best efforts, we had to take some strange measures, but overall it was an unpleasant experience and a time-consuming process.

For other projects I've been involved with, it's always been a normal capital structure, and I think it's better that way.

Collison: Are we underestimating how important capital is? A lot of the companies you're involved with need a lot of capital. Probably OpenAI is the company that needs capital the most, although who knows if there are others that need it more.

Are we underestimating how much capital constrains unrealized innovation? Is this a common theme across the various efforts you're involved in?

Altman: Yes, I think it's a problem. Basically all four of the companies that I'm involved with, apart from those where I just write checks as an investor, need a lot of capital.

Collison: Do you want to list those companies? For the audience in the room.

Altman: OpenAI and Helion are the projects that I spend the most time on, and then there's Retro and Worldcoin.

But you know, all of those companies raised at least nine figures in their first round of funding or before they released any product, and that number is even bigger for OpenAI. They all took a long time, years, to release their products.

I think there's a lot of value in being willing to do something like that, and that approach at some point fell out of favor in Silicon Valley.

I understand why, and companies that only need to raise a few hundred thousand or a million dollars to become profitable are great too. But I think we've leaned too far in that direction, and we've forgotten how to make high-risk, high-return investments that take massive capital and time. There's value in those too, and we should be able to support both models.

Collison: It goes to the question of why there aren't more Elon Musks.

I guess the two most successful hardware companies, broadly defined, of the last 20 years (SpaceX and Tesla) were both started by the same person. This seems like a surprising fact.

Clearly, Elon is unique in many ways. But what's your answer to that question? Is it particular to him, or does it fit the capital story you were just describing? If you were to create more SpaceXs and Teslas in the world, which you might be trying to do in your own work, what would you try to change if you had to push in that direction in a systematic way?

Altman: I've never met another Elon, and I've never met anyone who I think could easily be developed into another Elon. He's a strangely unique kind of character. I'm glad he exists in the world; but, you know, he's also a complex person. I don't know how to create more people like him. I don't know what your idea of how to create more is, and I'm curious.

Collison: Which companies that are not considered AI companies will benefit the most from AI in the next five years?

Altman: I think there will be an investment vehicle that figures out how to leverage AI to become an extraordinary investor with insane excess returns, something like a RenTech (Renaissance Technologies) built on these new technologies.

Collison: So, which (public) companies do you see?

Altman: Do you see Microsoft as an AI company?

Collison: For that question, let's assume the answer is no.

Altman: I think Microsoft will transform itself in almost every way through AI.

Collison: Is that because they take the problem more seriously, or is it because the nature of Microsoft makes them particularly well suited to the problem and better able to understand it?

Altman: They recognized the problem earlier than anyone else and took it more seriously than anyone else.
