A key committee of lawmakers in the European Parliament has approved a first-of-its-kind regulation on artificial intelligence, bringing it closer to becoming law.

The approval marks a milestone in authorities' efforts to regulate artificial intelligence, which is developing at an alarming rate. Known as the European AI Act, the law is the first in the West to address artificial intelligence systems.

The rules also specify requirements for providers of so-called "foundation models" such as ChatGPT. Such models have become a key concern for regulators, given how advanced they are becoming and fears that workers will be replaced.

The AI Act classifies the use of AI into four levels of risk: unacceptable risk, high risk, limited risk, and minimal or no risk.

By default, unacceptable risk applications are prohibited and cannot be deployed in the community or in the enterprise.

They include:

- Artificial intelligence systems that use subliminal, manipulative, or deceptive techniques to distort behavior

- Artificial intelligence systems that exploit vulnerabilities of individuals or specific groups

- AI systems used for social scoring or assessing trustworthiness

- Artificial intelligence systems that create or expand facial recognition databases through untargeted scraping

- Some applications of AI systems in law enforcement, border management, the workplace, and education

Some legislators had called for making the measures more expensive to ensure they cover ChatGPT. To that end, requirements have been imposed on "foundation models" such as large language models and generative AI.

"Providers of such AI models will be required to take measures to assess and mitigate risks to fundamental rights, health and safety, the environment, and the rule of law," said Linklaters lawyer Ceyhun Pehlivan. "They will also be subject to data governance requirements, such as checking the suitability of data sources and possible biases."

It is important to emphasize that while lawmakers in the European Parliament have passed the bill, it is still some way from becoming law.

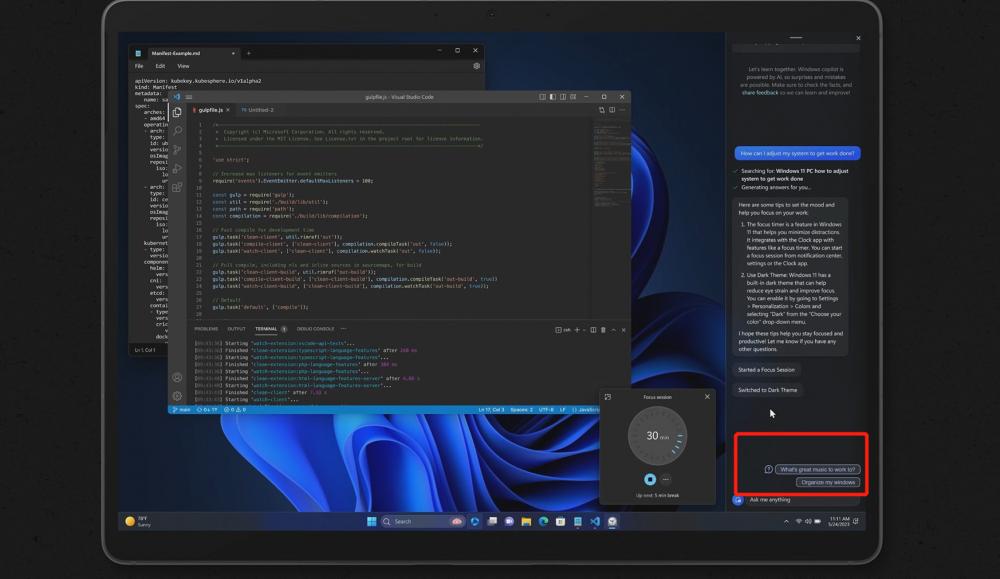

Private companies have been left to develop AI technology at a breakneck pace, giving rise to systems such as Microsoft-backed OpenAI's ChatGPT and Google's Bard.

Google recently announced a series of new AI updates, including an advanced language model called PaLM 2, which the company says outperforms some other systems on certain tasks.

New AI chatbots like ChatGPT have captivated many technologists and academics with their ability to respond to user prompts in a human-like manner, powered by large language models trained on large amounts of data.

These regulations will inevitably raise concerns in the technology industry as well. The Computer and Communications Industry Association (CCIA) said it is concerned that the scope of the AI Act has been expanded too far and could cover harmless AI developments.

CCIA European policy manager Boniface de Champris said: "It is worrying to see that broad categories of AI applications, which actually pose very limited risk or none at all, will now face strict requirements and may even be banned in Europe."

"The European Commission's original proposal for an AI bill took a risk-based approach to regulating specific AI systems that pose a clear risk," de Champris added.
